First, the DeiC Interactive HPC consortium secured some of the most powerful hardware available for AI: NVIDIA H100 GPUs, which are hosted at the SDU data center and available via the UCloud platform. Now we are thrilled to announce the launch of two applications for AI on UCloud: Chat UI and Text Generation. These are the first chat applications of their kind on UCloud, designed to foster AI research and innovation, with a particular focus on large language models (LLMs). More apps for AI will follow soon!

Chat UI

Finally, a way to use large language models (LLMs) with sensitive data!

Our new Chat UI app is an open-source implementation of a ChatGPT-like web application, designed primarily to run with Ollama as a backend. Ollama is an open-source project that allows you to run LLMs on your local machine. Via the Chat UI app on UCloud, you can use Ollama to run open-source models, some of which are rated on par with the ones from Microsoft and OpenAI, on the UCloud hardware. This has several advantages, including the fact that you can process sensitive data:

“With Ollama, you have access to a catalog of open source large language models, which you can download for free. Via the ChatUI app, the user can download and run one of these models directly on UCloud. This means that everything works locally and you can use this to process information, even if it is sensitive. You can be sure that you don’t send any information to external servers,” says Emiliano Molinaro, team leader for research support at the SDU eScience Center.

Emiliano Molinaro has been responsible for the implementation of the two recent AI apps on UCloud.

Chat UI also interfaces with OpenAI GPT models and supports semantic search on document files and web pages. This makes it a versatile tool for interactive communication and advanced document querying. You can also save your chat history and all the models you have downloaded – all in the safe, ISO 27001-certified environment that UCloud offers.

Text Generation

Fine-tune LLMs to suit your needs!

For our more advanced AI users, the Text Generation app is a powerful web application that supports multiple backends. Text Generation lets you run models hosted on Hugging Face, a repository of open-source LLMs that can be downloaded for free. It also lets you apply LoRA (Low-Rank Adaptation) to fine-tune LLMs, making it an essential tool for researchers and developers working on custom language models and AI applications.

“Text Generation is a very nice interface that offers you a good platform for customizing the responses of large language models. One could fine-tune a model, e.g. to specialize in a specific language, research area or task,” says Emiliano Molinaro.
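To give a rough idea of what LoRA does, here is a minimal sketch in plain Python. It is not how the Text Generation app implements fine-tuning; it only illustrates the general idea that the original weight matrix W stays frozen while two small matrices B and A are trained, so that the adapted weight becomes W + (alpha / r) * B·A. All names and numbers below (`lora_effective_weight`, `trainable_params`, the tiny matrices, the 4096 layer size) are made up for illustration.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w, b, a, alpha, r):
    """Return W + (alpha / r) * (B @ A); W itself is never modified."""
    delta = matmul(b, a)
    scale = alpha / r
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def trainable_params(d_out, d_in, r):
    """Compare parameter counts: full fine-tuning vs. a rank-r LoRA adapter."""
    full = d_out * d_in
    lora = r * (d_out + d_in)
    return full, lora


# Frozen 2x3 weight matrix (illustrative values only).
W = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
r = 1
A = [[0.5, -0.5, 1.0]]      # r x d_in
B_init = [[0.0], [0.0]]     # d_out x r, starts at zero

# With B at zero, the adapter changes nothing at the start of training.
W_start = lora_effective_weight(W, B_init, A, alpha=2, r=r)

# After (pretend) training, B is non-zero and the weight is shifted.
B_trained = [[1.0], [0.0]]
W_adapted = lora_effective_weight(W, B_trained, A, alpha=2, r=r)

# For a hypothetical 4096 x 4096 layer with rank 8:
full, lora = trainable_params(4096, 4096, 8)
print(full, lora)
```

The point of the comparison at the end is that the adapter trains far fewer parameters than the full matrix, which is why LoRA makes fine-tuning feasible on limited GPU resources.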

Initiatives such as the Danish Foundation Models (DFM) and the entirely new Dansk Sprogmodel Konsortium will be using AI resources on UCloud via the DeiC Interactive HPC service.

Model-as-a-Service

Both Chat UI and Text Generation can also function as LLM inference servers, accessible via API. This Model-as-a-Service (MaaS) solution gives developers access to the latest foundation models from leading AI innovators, allowing them to build generative AI apps using inference APIs and hosted fine-tuned models.

“With this feature, users can give others the possibility to interact with large language models that are already set up for them even if these people do not have an account on UCloud. This is a very powerful tool because it allows more people to interact with the same model,” says Emiliano Molinaro.
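As a rough sketch of what calling such an inference server could look like from a client, the example below builds (but does not send) an HTTP request. It assumes the server accepts an OpenAI-style chat completions payload, which is an assumption and should be checked against the Chat UI and Text Generation documentation. The URL, model name, and the helper `build_chat_request` are hypothetical placeholders.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt, api_key=None):
    """Build a POST request for an (assumed) OpenAI-style chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Only added if the server is configured to require a key.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",  # assumed path
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Placeholder address and model name; replace with the values for your own job.
req = build_chat_request(
    "https://my-llm-server.example",
    "example-model",
    "Summarize LoRA in one sentence.",
)
print(req.get_method(), req.full_url)
# To actually send it: urllib.request.urlopen(req)
```

Building the request separately from sending it makes it easy to inspect exactly what would leave your machine before any data is transmitted.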

For more details, visit the Chat UI Documentation and Text Generation Documentation.

New Category

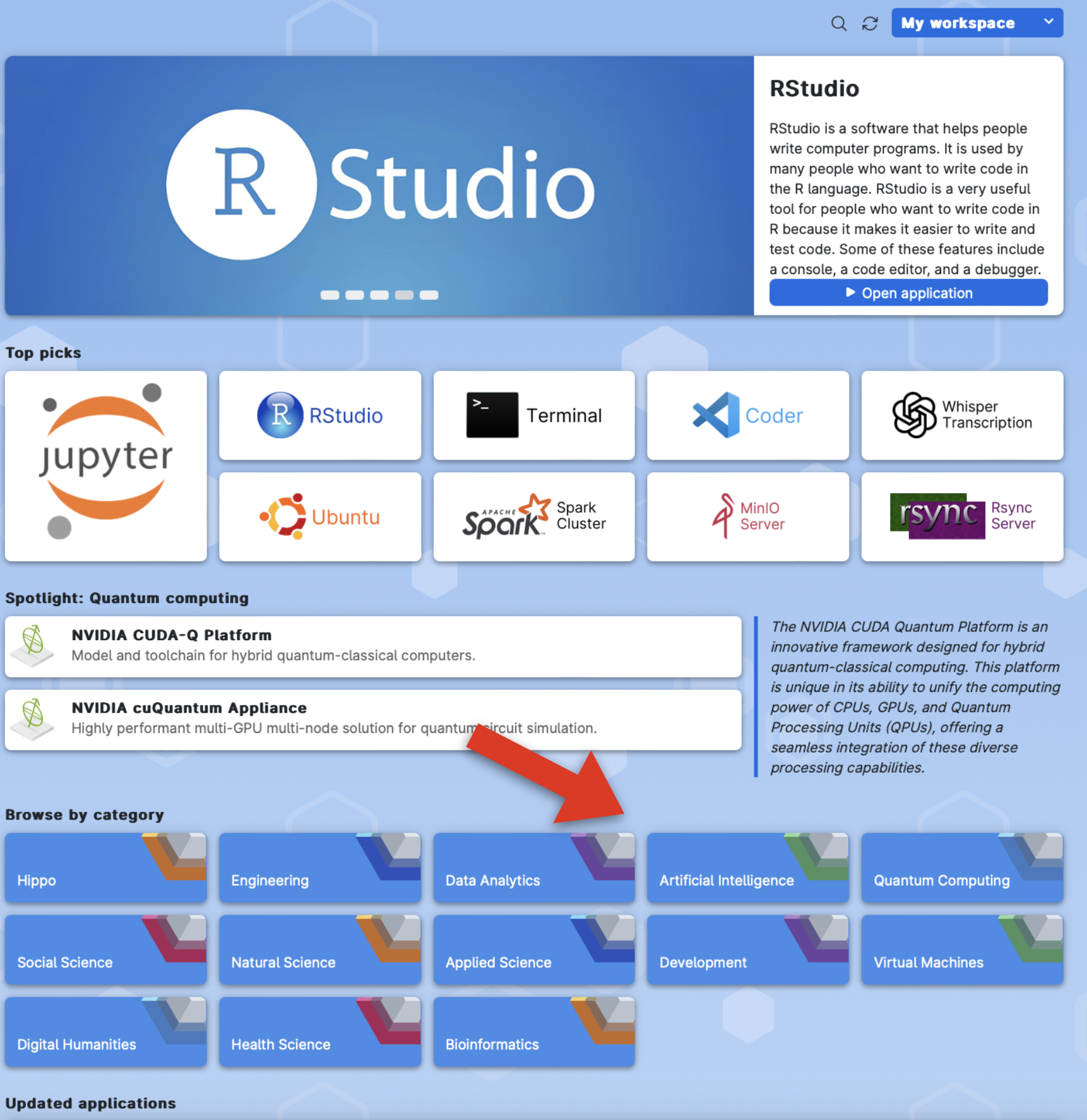

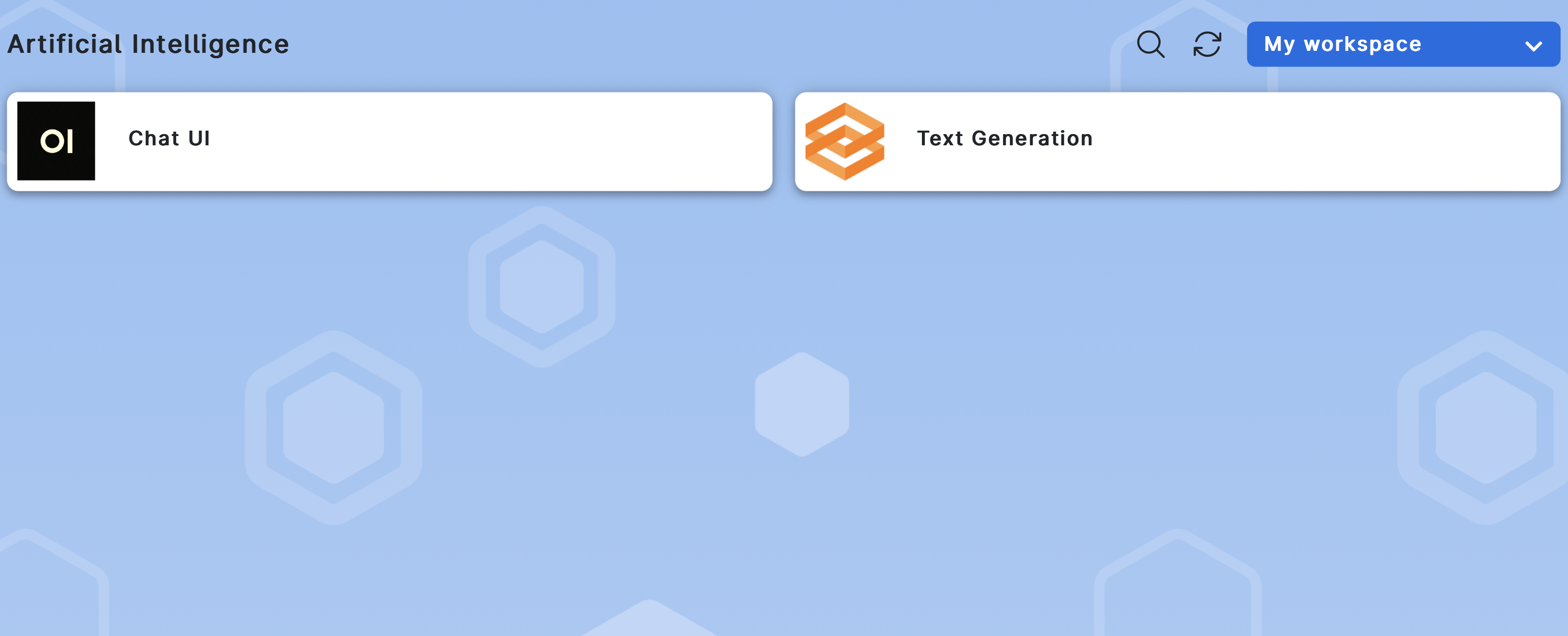

If you visit the App Store on UCloud, you will see a new category called “Artificial Intelligence”.

Clicking on the category brings up two apps: Chat UI and Text Generation.

Over the coming months, we plan to add more apps for AI to this category. Currently, NVIDIA NeMo and NVIDIA Triton Inference Server are scheduled to be deployed in August. These apps are particularly well suited to run on the NVIDIA H100 GPUs that the consortium acquired at the end of last year.

NB!

All the AI apps on UCloud run best on the available GPU resources. Given the growing interest in AI, these resources can be scarce during normal working hours. If you are currently experiencing problems with a lack of resources on UCloud, please have a look at our guide: Interactive HPC Best Practices.

If you are planning to use any of the AI tools on UCloud extensively, we encourage you to contact the Front Office of your home institution as soon as possible so that we can help you use the available resources in the best way. An example of extensive use could be a teacher who would like to spin up chatbots for all the students in a class, or an entire department or center that plans to use Chat UI for sensitive document querying.

The DeiC Interactive HPC consortium is working hard to secure more hardware for the popular UCloud platform. 16 more H100 GPUs are expected to be delivered later this year, and we are also hoping to secure additional funding for GPUs to accommodate the needs of AI researchers in Denmark. The consortium can also host dedicated hardware for research groups and departments. Please get in contact with us at the SDU eScience Center if you would like to know more about this possibility.