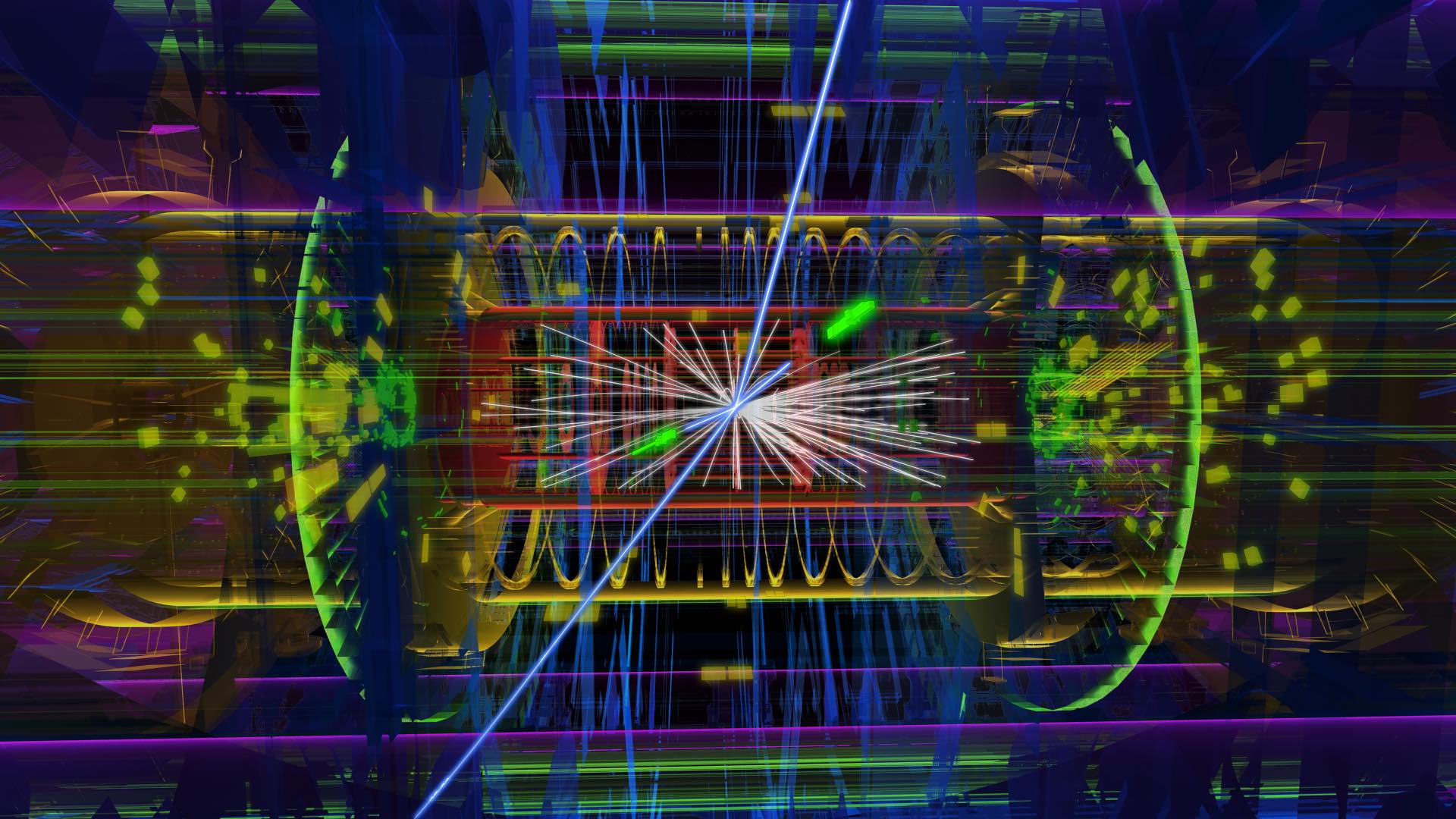

Illustration by ATLAS Experiment/CERN

SDU has a proud tradition of receiving large European HPC grants. This has not only built up knowledge at SDU of how to take advantage of tremendous computing power in research; it has also attracted and retained staff whose competences enable SDU to run an internationally leading center for research infrastructure such as the SDU eScience Center.

Now, another SDU researcher can be added to the list of European HPC grant recipients – and this time, the grant is the largest ever given for HPC, not only to an SDU researcher, but to any researcher in Denmark. Antonio Rago, Associate Professor at the Department of Mathematics and Computer Science, is leading a project which has been awarded 120 million CPU core hours on the European supercomputer LUMI.

Gigantic simulation

It can be hard to fathom the vastness of the grant that Antonio Rago’s project has received.

As part of the consortium behind the LUMI supercomputer, Denmark has a share of the machine which corresponds to approximately 87 million core hours per year. Antonio Rago’s project alone has received approximately 33 million core hours more than the Danish e-infrastructure Consortium (DeiC) and the eight Danish universities, which allocate the Danish resources on LUMI, can allocate to all Danish researchers in a year.

Antonio Rago’s grant could also be compared to an average laptop, which typically contains between four and eight CPU cores. If Antonio Rago’s grant were to run on a laptop with eight CPU cores, the job would run for 625,000 days – i.e. 1712 years.
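The comparisons above can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted in the article; the 365-day year and the idea of a laptop keeping all eight cores busy without pause are simplifying assumptions made for illustration.

```python
# Figures quoted in the article
GRANT_CORE_HOURS = 120_000_000       # core hours awarded on LUMI
DANISH_SHARE_PER_YEAR = 87_000_000   # approximate Danish yearly share of LUMI
LAPTOP_CORES = 8                     # upper end of a typical laptop

# Assumptions: a 365-day year, all laptop cores busy around the clock
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365

# How much the grant exceeds the yearly Danish share
excess_core_hours = GRANT_CORE_HOURS - DANISH_SHARE_PER_YEAR

# Wall-clock time if the same work ran on one eight-core laptop
wall_clock_hours = GRANT_CORE_HOURS / LAPTOP_CORES
wall_clock_days = wall_clock_hours / HOURS_PER_DAY
wall_clock_years = wall_clock_days / DAYS_PER_YEAR

print(f"Excess over Danish yearly share: {excess_core_hours:,} core hours")
print(f"Laptop run time: {wall_clock_days:,.0f} days, about {wall_clock_years:,.0f} years")
```

With these inputs the excess comes out at 33 million core hours and the laptop run time at 625,000 days, which is a little over 1712 years.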

Needless to say, an average laptop would not be able to carry out the computation required for Antonio Rago’s project. In fact, no better computer than LUMI can be used for the project, as Rago and his group have designed their code specifically for this machine:

“Our simulations are devised to take full advantage of LUMI’s features: powerful nodes with extremely fast interconnection. Meaning that each node can talk with any other one in a very swift and efficient way, effectively summing the computation capabilities of all the nodes together. It is then the sheer size of the machine that allows me to run my gigantic simulation,” says Antonio Rago.

LUMI is an abbreviation for “Large Unified Modern Infrastructure” and is located in CSC’s data center in Kajaani, Finland. It was recently ranked as the fastest supercomputer in Europe and the third fastest in the world.

Terribly tough measurements

Antonio Rago’s field of research is particle physics – more specifically lattice quantum chromodynamics (QCD), a field known for its use of heavy computation.

To use an analogy, Antonio Rago explains that his method could be compared to the generation and analysis of a painting, e.g. of the Mona Lisa, where the task is to perform some type of measurement, e.g. of the size of the Mona Lisa’s nostril. If the pixels of the painting are too coarse, you cannot see the Mona Lisa, let alone measure the nostril. You need a resolution of e.g. a few millimetres.

Now imagine that in the same painting of the Mona Lisa, with a resolution of a few millimetres, you also need to include Mont Blanc in its original size. And you need to develop a type of measurement that is extremely precise and relevant both for the Mona Lisa’s nostril and for Mont Blanc. This may provide some insight into the difficulties that Antonio Rago and his research group are facing – the particles that they need to measure can differ enormously in size and weight.

“You have to imagine that I want to deal with a matrix of 10^20 elements. It is a gigantic amount of data and I want to act on this matrix within some very specific precision for every single element. So you really need a gigantic supercomputer and to have a very precise understanding both of the algorithmic properties and of the hardware, because you cannot afford to lose any information. It’s a terrible task,” Antonio Rago admits.

Searching for the origin of asymmetry

The terrible task could be worth the trouble in the end, however, because it might lead us to a better understanding of such big open questions as: why are we alive?

In the universe, there is an imbalance between matter and anti-matter. Matter is essentially everything we see around us – from the smallest grain of sand to large planets in the universe. Anti-matter is the opposite, i.e. what is lacking, or matter with its charge reversed. Since the Big Bang, there has been a competition between matter and anti-matter. They were once equal in amount. But some mechanism, which is still unknown to scientists, has resulted in an overwhelming victory of matter over anti-matter, leading to the universe that we know today, which contains comparatively little anti-matter.

The search for this mechanism is exactly where Antonio Rago and his research group believe that they can contribute a new tool called “master field simulation”:

“The lattice approach – i.e. the measurements done via simulations on a computer – has somehow reached its saturation. It cannot go any more precise than it is now. We are pretty confident that the master field simulation will allow for a new era for this measurement,” says Antonio Rago.

HPC at SDU

Though the 120 million CPU core hours are the biggest grant Antonio Rago’s group has ever received, the group has an excellent track record of receiving big HPC grants. This was indeed stated as one of the reasons why the EuroHPC JU allocation committee decided to accommodate the large request for HPC resources.

In this sense, the record-breaking grant illustrates the enormous potential arising out of the current HPC environment at SDU, which allows new and upcoming researchers to explore exciting new territories and leading experts to share knowledge, enhance their skills and attract talent to SDU.

“The excellent HPC environment at SDU is crucial because none of this can be brought to fruition without a huge amount of development, both for the algorithms and the code implementation. All of this can only be done first in some sort of safe environment where you can scale up your information. In the end, I could burn up all the 120 million core hours if something goes wrong. It’s daunting. So I need to be absolutely sure what I am doing is predictive,” says Antonio Rago.

The first researcher at SDU to receive a European HPC grant was Claudio Pica, Professor at the Department of Mathematics and Computer Science and Director of the SDU eScience Center, in 2012. Prof. Claudio Pica’s project was awarded 22 million core hours on FERMI, hosted by CINECA in Italy. Since then, many more have followed, including Prof. John Bulava, who is no longer employed at SDU, Prof. Carsten Svaneborg and Prof. Himanshu Khandelia.

“The beauty is that a great deal of the knowledge is already available here at SDU to take advantage of. It’s a kind of astonishing story, and a fantastic opportunity, also for SDU. And with the revolution of increasing data availability, the benefits do not only affect my research field. The effect of HPC is going to be more and more relevant for everyone,” says Antonio Rago.

Transitioning to GPUs

Despite the vastness of the grant that Antonio Rago and his group have just received, they recently submitted an application for an even larger grant – this time for 130 million CPU core hours on LUMI and, on top of this, 500,000 GPU node hours on another EuroHPC JU supercomputer, Leonardo. For this application, Antonio Rago is not the project leader. However, as part of the group, he will still contribute to the project.

The GPU (graphics processing unit) contains thousands of small cores which, working in parallel, can perform simple tasks many times faster than a CPU. With GPUs you can therefore do substantially more computation, and at the same time the machines take up less space and require less cooling, which makes them more environmentally friendly.

But transitioning to GPUs requires that researchers are able to split up and parallelize their code. This is a huge task and, to some extent, a pioneering one, since the majority of the research community is accustomed to running their code on CPUs.
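To give a rough idea of what splitting up and parallelizing a computation means, here is a minimal sketch in plain Python. It is not the group's code and it does not run on a GPU: the function names and the sum-of-squares workload are hypothetical, chosen only to show the pattern of cutting data into independent pieces, processing each piece separately and combining the partial results. Python threads are used purely to illustrate the structure; they do not speed up this kind of CPU-bound work.

```python
from concurrent.futures import ThreadPoolExecutor

def split_into_chunks(values, n_chunks):
    """Partition a list into n_chunks contiguous pieces of near-equal size."""
    size, remainder = divmod(len(values), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `remainder` chunks take one extra element each
        end = start + size + (1 if i < remainder else 0)
        chunks.append(values[start:end])
        start = end
    return chunks

def partial_sum_of_squares(chunk):
    """Work done independently on one piece of the data."""
    return sum(x * x for x in chunk)

def parallel_sum_of_squares(values, n_workers=4):
    """Split the data, process each piece on its own worker, combine the results."""
    chunks = split_into_chunks(values, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partials)

data = list(range(1000))
total = parallel_sum_of_squares(data)
print(total)
```

The hard part in real research codes is that the pieces are rarely this independent: they usually need to exchange data with each other, which is where fast interconnects and careful algorithm design come in.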

“Everyone in our field is trying to take advantage of these disruptive technologies. It takes years to be done, but we are there, and yes it will be more powerful. This also goes with the story of why it is so crucial to work at SDU. We are collaborating with colleagues at SDU, students and the SDU eScience Center to develop the transition of one of these codes to the GPU setup that is way more powerful than the CPU core hour computation,” says Antonio Rago.

More information

EuroHPC JU calls, including the Extreme Scale Access Mode calls which Antonio Rago and his group applied for, are open on a continuous basis, and you can apply for resources on all eight EuroHPC machines. Find out more here.

National HPC resources are available to all Danish researchers. The SDU eScience Center is hosting two of the services, one of them in collaboration with Aalborg University and Aarhus University, where you can also apply for GPU resources. Find out more here.

If you are employed at SDU and you would like to test the SDU eScience Center’s services or know more about how we can help you in your research, please contact support@escience.sdu.dk. The SDU eScience Center can also help you in your journey to transition your code to GPUs and with an application for a EuroHPC or national HPC grant.