Image: NVIDIA Hopper H100 GPU. Credit: NVIDIA

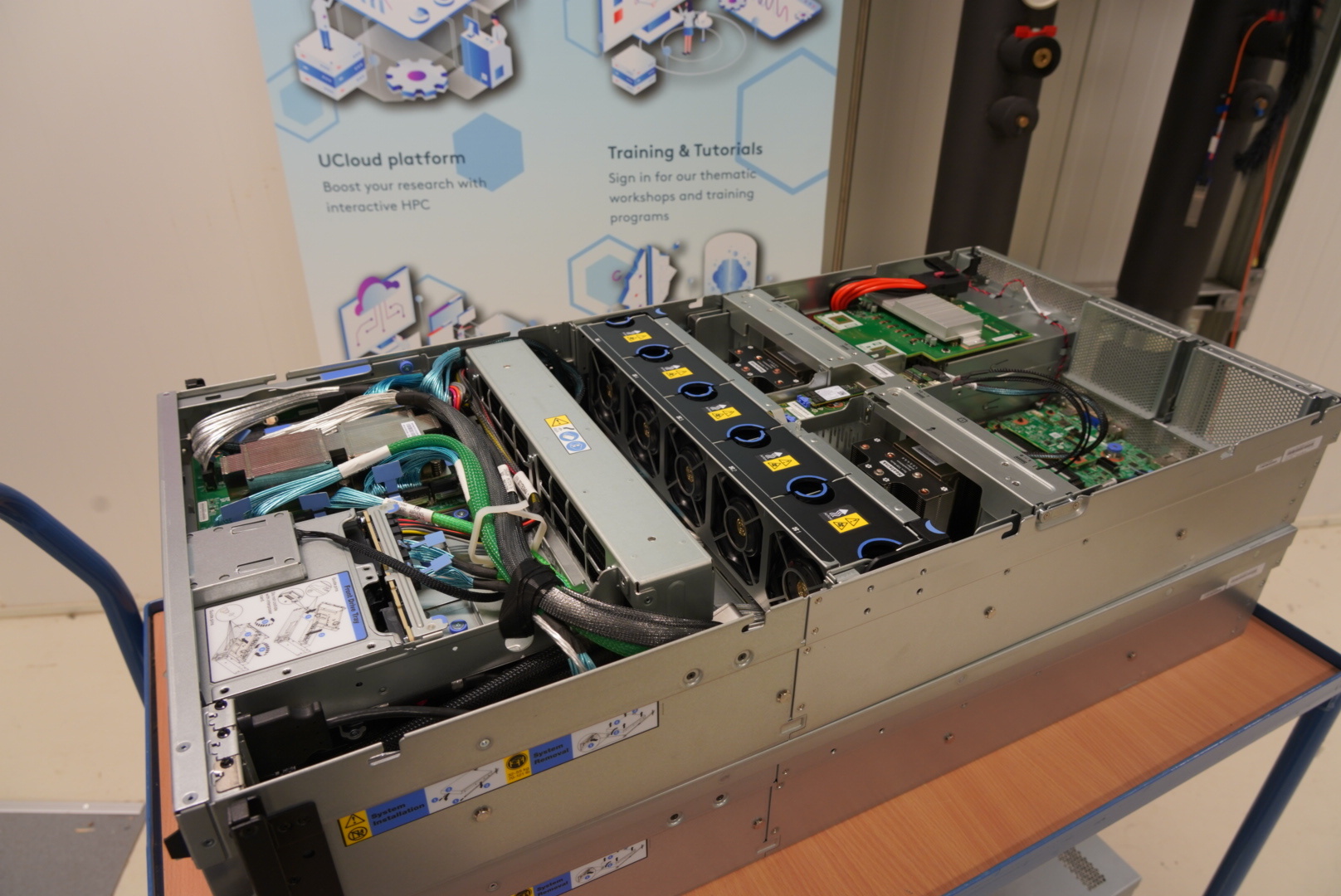

AI companies around the world are scrambling to get their hands on NVIDIA's latest and most powerful GPU, the H100. The biggest customers include OpenAI, Microsoft and Google. Now, 16 NVIDIA H100 GPUs have landed at SDU, ready to be integrated into the DeiC Interactive HPC system.

What is a GPU?

The GPU (graphics processing unit) contains thousands of small cores that, working in parallel, can complete many simple tasks far faster than a CPU (central processing unit). If you know how to split up and “parallelize” your code, you can do substantially more computation on a GPU than on a classical CPU. GPU machines also take up less space and require less cooling for the same amount of computation, which makes them more environmentally friendly.
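To make the idea of splitting up and parallelizing a task concrete, here is a minimal sketch in plain Python. It is not GPU code and it is not taken from the DeiC or UCloud documentation: the function names are hypothetical, and the worker threads only stand in for the many small cores of a GPU. It shows the pattern only (cut the data into independent chunks, apply the same simple operation to each chunk, combine the results) and should not be expected to run faster than the sequential version.

```python
from concurrent.futures import ThreadPoolExecutor


def square_all(chunk):
    """Apply the same simple operation independently to every element."""
    return [x * x for x in chunk]


def split_into_chunks(data, n_chunks):
    """Split data into at most n_chunks contiguous pieces of near-equal size."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_square(data, n_workers=4):
    """Hand each chunk to its own worker, then join the results in order."""
    chunks = split_into_chunks(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # pool.map returns results in the same order as the input chunks
        results = list(pool.map(square_all, chunks))
    return [y for part in results for y in part]


data = list(range(10))
# The chunked version must give exactly the same answer as the sequential one.
print(parallel_square(data) == square_all(data))
```

The decomposition works because no element depends on any other. Workloads with that property, such as rendering pixels or the matrix operations used in machine learning, are the ones that map well onto a GPU.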

Originally developed for computer games, the GPU turned out to be uniquely well suited for machine learning and AI. The high-resolution graphics needed for computer games require code that runs more efficiently in “parallel” on many small, fast cores (as opposed to in sequence on one large CPU core). Training AI and machine learning algorithms from data requires the same kind of computation.

“Today GPUs are a core technology not only for the HPC world but for the whole society. The large impact that AI has produced in recent years is due to the advances of GPUs. A very large part of today's research and industrial applications runs on GPUs. This trend will continue and even increase in the future,” says Claudio Pica, director of the SDU eScience Center and professor at the Department of Mathematics and Computer Science at SDU.

The powerful H100

With the arrival at SDU of four servers, each with four H100 GPUs, Danish researchers will be able to access the same hardware coveted by some of the biggest tech companies in the world. OpenAI’s ChatGPT reportedly runs on thousands of NVIDIA A100 chips (the previous generation of NVIDIA GPUs).

The Hopper architecture, which includes the H100 GPUs, is the latest release from NVIDIA. The architecture is named after Rear Admiral Grace Hopper, who worked on some of the first general-purpose computers and was responsible for major advances in programming languages.

“When talking about raw computational power, the H100 is generally 2-3 times faster than the previous A100 generation, and a lot faster for AI workloads. With 80 GB of memory, they also have twice as much memory as our current A100 cards. Moreover, the new machines use the SXM5 socket, which enables simultaneous non-blocking NVLink communication between all GPUs in the machine. This is particularly important when using multiple GPUs at the same time because the performance depends on how fast you can transfer data between the GPUs,” says Martin Lundquist Hansen, team leader for research infrastructure at the SDU eScience Center.

Another advantage of the H100 card is that it supports MIG (Multi-Instance GPU), which makes it possible to split one GPU into multiple smaller GPU instances.

“Because the H100 is so powerful, for some workloads it might not make sense to use the entire GPU. It would also allow us to give GPU resources to many more people at the same time, for example when GPUs are needed for teaching a course. Using this MIG feature, each of the new machines can in principle provide up to 28 GPUs,” Martin Lundquist Hansen explains.
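The figure of 28 can be checked with a little arithmetic. The sketch below takes the server and GPU counts from the article and assumes a maximum of seven MIG instances per H100. That limit is not stated in the article, but it is consistent with the quoted 28 per machine. The variable names are purely illustrative.

```python
SERVERS = 4                    # from the article
GPUS_PER_SERVER = 4            # from the article
MAX_MIG_INSTANCES_PER_GPU = 7  # assumption: not stated in the article

physical_gpus = SERVERS * GPUS_PER_SERVER
mig_per_server = GPUS_PER_SERVER * MAX_MIG_INSTANCES_PER_GPU
mig_total = SERVERS * mig_per_server

print(f"Physical GPUs in total:       {physical_gpus}")   # 16
print(f"MIG instances per server:     {mig_per_server}")  # 28
print(f"MIG instances across servers: {mig_total}")       # 112
```

Under that assumption, the four servers together could in principle serve up to 112 small GPU instances at once, for example one per student in a large course.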

How are GPUs used in research?

AI and machine learning have become increasingly relevant for several research areas, including robotics, precision medicine, digital humanities, bioinformatics, materials science, drug discovery and fintech, to name just a few.

At the Center for Humanities Computing, Aarhus University, professor and center leader Kristoffer Nielbo can vouch for the growing need for state-of-the-art GPUs.

“In applied NLP (Natural Language Processing), GPUs are crucial for the training and inference of large language models. The need for GPU resources is growing exponentially, driven by these models’ increasing complexity and size. The H100 GPUs are transformative in this context because they promise a 30X increase in performance and a Transformer Engine that can handle trillion-parameter models. This massive leap in performance directly caters to the needs of our field, enabling us to tackle more complex AI challenges and develop and use large language models with greater efficiency,” says Kristoffer Nielbo.

Many other research areas also rely heavily on GPUs, including physics and biophysics simulations, virtual prototyping and digital twins, finite element model simulations, and data analytics in general.

Secure, interactive access

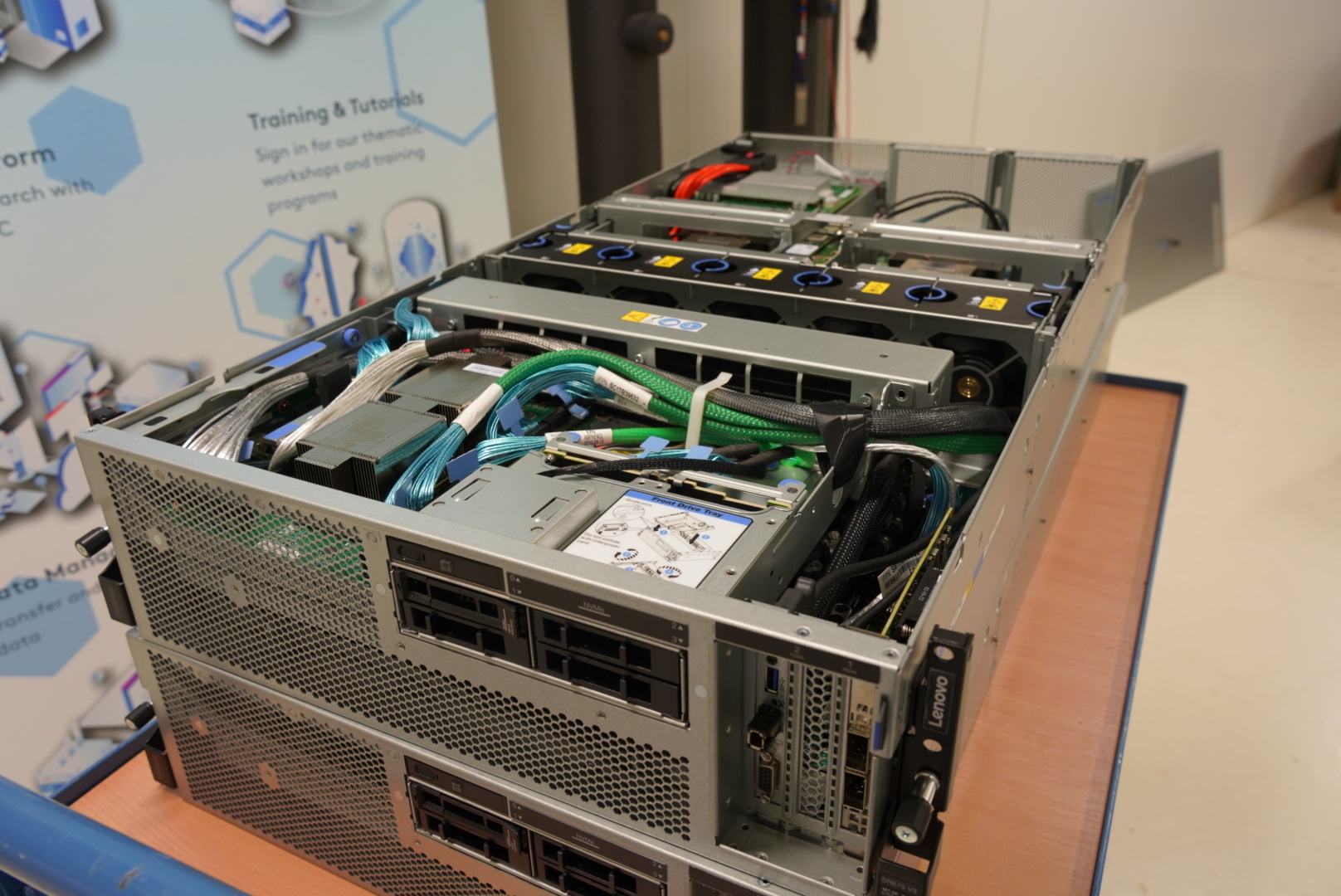

The 16 H100 GPUs will become available via the national HPC service, DeiC Interactive HPC, which is run by a consortium of universities consisting of Aarhus University, Aalborg University and the University of Southern Denmark. DeiC Interactive HPC is based on the UCloud platform, and the H100 is not the only type of NVIDIA GPU available via the service: A100, A40, A10 and T4 GPUs are also available.

“UCloud has been a game changer for many Danish research communities. It allows researchers to have interactive access to the resources they need for computing and data analysis in a much better, simpler and more secure way than any other system previously available. UCloud contains a large catalog of “apps” spanning all research areas which users can select and run with a few clicks. In addition, researchers can bring their own software environment when needed. This enables researchers to start interactive applications like Jupyter notebooks or RStudio on powerful GPU servers in seconds,” says Claudio Pica.

All DeiC Interactive HPC GPUs are within the scope of the SDU and AAU data centers’ ISO/IEC 27001 certifications, an international standard for information security. This means they can be used for analysis of sensitive data.

How do I get access?

Once the newly acquired H100 GPUs have been installed at SDU, they will become available via UCloud. If you are a Danish researcher, you can apply either

- for resources managed by your local front office via the UCloud interface, or

- for resources managed by UFM via DeiC national HPC calls.